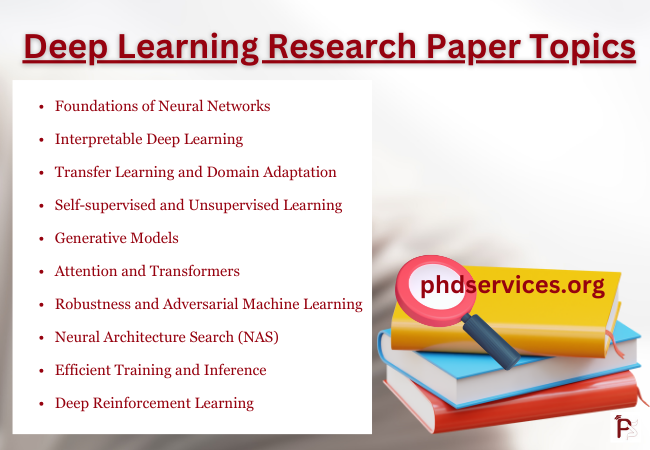

Deep learning remains a highly active area of research with plenty of topics to explore. Our subject matter experts briefly describe the trending topics below. The topics we develop cover a range of open, unanswered questions and theories. To write a research paper, consider the following potential topics within the domain of deep learning:

- Foundations of Neural Networks:

This topic studies the capabilities and limitations of deep neural networks. We examine how deep learning works by studying internal representations, generalization and optimization landscapes.

- Interpretable Deep Learning:

We use these techniques to visualize and understand the decision-making process of neural networks. The aim is to make black-box deep learning models interpretable and transparent.

- Transfer Learning and Domain Adaptation:

These methods transfer knowledge from one domain or task to another. We also use novel architectures or approaches for few-shot learning.

- Self-supervised and Unsupervised Learning:

Self-supervised and unsupervised learning methods offer innovative ways to train models without labeled data. Here we explore clustering, contrastive learning or generative methods in unsupervised paradigms.
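As an illustration of the contrastive learning idea mentioned above, the following is a minimal sketch of an InfoNCE-style loss for a single anchor, written in plain Python. The function names, the cosine similarity measure and the temperature value are assumptions chosen for this example, not a prescribed method.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def info_nce(anchor, positive, negatives, temperature=0.1):
    """Cross-entropy of picking the positive among positive + negatives.

    The temperature of 0.1 is an illustrative default, not a recommended value.
    """
    sims = [cosine(anchor, positive)] + [cosine(anchor, n) for n in negatives]
    logits = [s / temperature for s in sims]
    # Log-sum-exp with the maximum subtracted for numerical stability.
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum - logits[0]

# The loss is small when the positive is close to the anchor ...
aligned = info_nce([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0], [-1.0, 0.0]])
# ... and large when a negative is closer to the anchor than the positive.
misaligned = info_nce([1.0, 0.0], [0.0, 1.0], [[1.0, 0.0], [-1.0, 0.0]])
```

In a real self-supervised setup the vectors would be encoder outputs for two augmented views of the same sample, and the loss would be averaged over a batch.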

- Generative Models:

We explore novel applications of Generative Adversarial Networks (GANs) and ways to improve them. We also examine other generative frameworks such as Variational Autoencoders (VAEs) or flow-based models.

- Attention and Transformers:

We investigate the internals of attention mechanisms to understand how they work. We also enhance transformer architectures to make them more effective or task-oriented.
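To make the attention mechanism concrete, here is a minimal sketch of single-head scaled dot-product attention in plain Python. The function names and the list-of-lists representation are assumptions for illustration; real implementations use tensor libraries and batched matrix products.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for single-head attention.

    queries: list of d-dimensional vectors
    keys:    list of d-dimensional vectors, one per value
    values:  list of vectors, same length as keys
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of the query with every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

outputs, weights = scaled_dot_product_attention(
    [[1.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]], [[1.0, 2.0], [3.0, 4.0]]
)
```

Inspecting `weights` shows which keys each query attends to, which is a common starting point when studying what an attention layer has learned.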

- Robustness and Adversarial Machine Learning:

Here we study how to defend against adversarial attacks and use these techniques to build more robust models.
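One widely used way to probe robustness is the fast gradient sign method (FGSM), which perturbs an input in the direction that increases the loss. The sketch below applies it to a tiny logistic regression model in plain Python; the model, weights and epsilon are hypothetical values chosen only to illustrate the idea.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def fgsm(weights, bias, x, label, epsilon):
    """Return x + epsilon * sign(dL/dx) for binary cross-entropy loss L."""
    p = predict(weights, bias, x)
    # For logistic regression with cross-entropy, dL/dx_i = (p - label) * w_i.
    grad = [(p - label) * w for w in weights]
    sign = lambda g: (g > 0) - (g < 0)
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical model and input, for illustration only.
weights, bias = [2.0, -1.0], 0.0
x, label = [1.0, 0.5], 1
x_adv = fgsm(weights, bias, x, label, epsilon=0.1)
clean_conf = predict(weights, bias, x)      # confidence on the clean input
adv_conf = predict(weights, bias, x_adv)    # lower confidence after the attack
```

Adversarial training, one common defense, feeds examples like `x_adv` back into the training set with their correct labels.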

- Neural Architecture Search (NAS):

This approach helps us find optimal network architectures and transfer those architectures across tasks.

- Efficient Training and Inference:

We apply techniques such as pruning and quantization to compress neural networks. The goal is faster model training or on-device inference.
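The two compression techniques named above can be sketched on a flat list of weights. This is a simplified illustration in plain Python, assuming unstructured magnitude pruning and symmetric 8-bit quantization; the function names and the example weights are made up for this sketch.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    # Indices sorted by absolute value; the first k are pruned.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

def quantize_int8(weights):
    """Symmetric uniform quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(q, scale):
    """Map quantized integers back to approximate float weights."""
    return [v * scale for v in q]

weights = [0.5, -0.05, 1.27, -0.9, 0.01, 0.3]   # hypothetical layer weights
pruned = magnitude_prune(weights, sparsity=0.5)  # half of the weights become 0.0
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)                  # close to `pruned`, not identical
```

In practice pruning and quantization are usually followed by fine-tuning, because both steps introduce small errors that can reduce accuracy.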

- Deep Reinforcement Learning:

Decision-making tasks are tackled by integrating deep learning with reinforcement learning. We study exploration strategies, multi-agent setups or real-world applications.

- Multimodal and Cross-modal Learning:

These models extract information from a variety of modalities such as vision and audio. We use these techniques to handle imbalanced data or missing modalities.

- Applications in Medicine:

In the field of medicine, deep learning is applied to medical imaging, drug discovery or genomics. We use these methods to handle medical data peculiarities such as imbalance, high dimensionality or privacy concerns.

- Ethical and Societal Impacts:

We study the biases in deep learning models and ways to reduce them, and we assess both the positive and negative impacts of deep learning systems on society.

- Learning with Limited Data:

With these techniques, we train models effectively using only limited labelled samples. The relevant methods include active learning, data augmentation and semi-supervised learning.

- Neuroscience-inspired Deep Learning:

This topic explores the similarities between artificial and biological neural networks. Training methods or novel architectures are inspired by the workings of the human brain.

When choosing a topic, it is important to find the gaps in the current literature and to follow the latest advancements in your area of interest. Reading recent publications regularly, discussing with peers and attending conferences can reveal a promising research direction. Our experts will share thesis topics and thesis ideas based on the trending ideas in deep learning.

How do you implement a deep learning research paper?

Implementing a deep learning research paper is both rewarding and challenging. Recreating a paper's results helps us understand the nuances of the proposed method and assess its robustness.

The following points give a step-by-step procedure for implementing a research paper:

- Select a paper:

- Relevance: The paper must be selected based on our interest or on research trends.

- Feasibility: We must have access to the required computational resources, datasets and tools.

- Thorough Reading:

To learn the methods, main ideas and nuances of the paper, we must read it several times. It is better to take notes, especially on hyperparameters, preprocessing steps, model architecture and any distinctive details.

- Gather Prerequisites:

- Datasets: We must obtain the exact datasets used in the paper. If they are not available, use a related dataset or synthetic data.

- Libraries & Tools: We must have the essential deep learning libraries and frameworks. Common tools include Keras, PyTorch and TensorFlow.

- Computational Resources: Determine whether Graphics Processing Units (GPUs) or Tensor Processing Units (TPUs) are needed and whether we have access to them.

- Break Down the Implementation:

Divide the paper's method into small tasks or components such as data preprocessing, model building, training and evaluation. This way, we can handle each task one by one.

- Data Pre-processing:

Follow the data augmentation or pre-processing steps highlighted in the paper. The data splits (e.g., training/validation/test) must be consistent with the paper.
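When a paper specifies only split proportions rather than official split files, a seeded shuffle keeps the split reproducible across runs. The sketch below is one simple way to do this in plain Python; the function name, the fractions and the seed are hypothetical, and official splits should be preferred whenever the authors provide them.

```python
import random

def split_dataset(items, val_fraction=0.1, test_fraction=0.1, seed=0):
    """Deterministically split items into (train, val, test) lists."""
    items = list(items)
    # A dedicated Random instance avoids touching the global random state.
    rng = random.Random(seed)
    rng.shuffle(items)
    n_test = int(len(items) * test_fraction)
    n_val = int(len(items) * val_fraction)
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]
    return train, val, test

# Example with 100 sample indices: 80 train, 10 validation, 10 test.
train, val, test = split_dataset(range(100), val_fraction=0.1,
                                 test_fraction=0.1, seed=42)
```

Saving the resulting index lists alongside the code makes it easy to verify later that every experiment used the same split.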

- Model Implementation:

First, we have to build the model architecture, paying close attention to activation functions, layer details and initializations. The loss functions must be noted. The optimizers and regularization must be consistent with the paper's specifications.

- Training:

The hyperparameters, such as learning rate, batch size and number of epochs, should be set as mentioned in the paper. If the paper discusses specific initialization or training strategies (e.g., learning rate schedules), implement them accordingly.
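A small configuration object helps keep the hyperparameters in one place, and a learning rate schedule can be written as a pure function of the training step. The sketch below assumes a linear warmup followed by cosine decay, which is one common schedule; the class name, field names and all numeric values are placeholders to be replaced with the values stated in the paper being reproduced.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainConfig:
    # Placeholder values; copy the real ones from the paper.
    base_lr: float = 1e-3
    batch_size: int = 64
    epochs: int = 10
    warmup_steps: int = 100
    total_steps: int = 1000

def learning_rate(step, cfg):
    """Linear warmup to base_lr, then cosine decay to zero at total_steps."""
    if step < cfg.warmup_steps:
        return cfg.base_lr * (step + 1) / cfg.warmup_steps
    decay_steps = max(1, cfg.total_steps - cfg.warmup_steps)
    progress = min(1.0, (step - cfg.warmup_steps) / decay_steps)
    return 0.5 * cfg.base_lr * (1.0 + math.cos(math.pi * progress))

cfg = TrainConfig()
schedule = [learning_rate(s, cfg) for s in range(cfg.total_steps + 1)]
```

Plotting `schedule` before training is a quick check that the implemented schedule matches the shape described in the paper.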

- Evaluation:

Evaluate the model using the same metrics as the paper and then compare our results with the published ones. Slight variations are acceptable, but significant differences may indicate an implementation error.
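The comparison with published numbers can be automated with a small helper that flags metrics falling outside a chosen tolerance. This is a sketch in plain Python; the function name, the 2% relative tolerance and the metric values are invented for illustration and do not come from any real paper.

```python
def compare_to_paper(reproduced, published, rel_tolerance=0.02):
    """Report the relative gap per metric and whether it is within tolerance."""
    report = {}
    for name, target in published.items():
        ours = reproduced[name]
        # Fall back to the absolute gap when the published value is zero.
        denom = abs(target) if target != 0 else 1.0
        rel_gap = abs(ours - target) / denom
        report[name] = {
            "ours": ours,
            "paper": target,
            "rel_gap": rel_gap,
            "ok": rel_gap <= rel_tolerance,
        }
    return report

# Made-up numbers: accuracy is within tolerance, F1 is not.
published = {"accuracy": 0.90, "f1": 0.80}
reproduced = {"accuracy": 0.895, "f1": 0.70}
report = compare_to_paper(reproduced, published)
needs_review = [name for name, row in report.items() if not row["ok"]]
```

What counts as a slight variation depends on the task and on the run-to-run variance the paper reports, so the tolerance is a judgment call rather than a fixed rule.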

- Debugging & Troubleshooting:

To monitor the training process, we can use visualization tools like TensorBoard. Correctness must be checked through intermediate outputs, feature maps or any available benchmarks. Models and logs must be saved regularly for analysis.

- Documentation:

The implementation process can be tracked with the help of detailed notes and comments in our code. This is highly beneficial if we plan to open-source the implementation or collaborate with others.

- Seek Community Help:

If we run into issues or doubts, we can join forums like Stack Overflow, Reddit or specific GitHub repositories. The community can often provide solutions to queries through discussion.

- Extend the Implementation:

Once we have reproduced the results, consider extending the paper's work, for example by testing on new types of datasets or improving the model's performance. We can also apply the model to other applications or real-world use cases.

- Open Source Your Work:

Consider sharing the implementation on platforms like GitHub. We must credit the original authors and provide a link to the paper.

- Feedback to Authors:

If we find discrepancies in the paper or have suggestions, try contacting the original authors for clarification.

Papers do not always contain every detail of the procedure, so part of the challenge of implementing research papers is making informed decisions about these omitted details.

What are deep learning projects for resume 2023?

Alongside your skills and work experience, a good research paper will indeed raise your value. Our expert writers provide careful explanations of the application and implementation of the thesis. We critically evaluate and present its uses to scholars, ensuring a strong academic foundation.

- Research on Intrusion Detect System of Internet of Things based on Deep Learning

- Comparison of Single-View and Multi-View Deep Learning for Android Malware Detection

- Detection Approach of Malicious JavaScript Code Based on deep learning

- Design of Interactive System for Autonomous Learning of Business English Majors Based on Deep Learning Algorithms

- Forecasting GDP per capita of OECD countries using machine learning and deep learning models

- Practical Considerations of In-Memory Computing in the Deep Learning Accelerator Applications

- A Comparison of Classical and Deep Learning-based Techniques for Compressing Signals in a Union of Subspaces

- Multi-Robot Exploration in Unknown Environments via Multi-Agent Deep Reinforcement Learning

- A Comprehensive Deep Learning Method for Short-Term Load Forecasting

- Transmission Line Fault Type Identification based on Transfer Learning and Deep Learning

- When Dictionary Learning Meets Deep Learning: Deep Dictionary Learning and Coding Network for Image Recognition with Limited Data

- An Ingenious Deep Learning Approach for Home Automation using TensorFlow Computational Framework

- UbiEi-Edge: Human Big Data Decoding Using Deep Learning on Mobile Edge

- A Proposed Method for Detecting Network Intrusion Using Deep Learning Approach

- A Deep Learning-based System for Detection At-Risk Students

- Edge Accelerator for Lifelong Deep Learning using Streaming Linear Discriminant Analysis

- Applying Deep Learning for Automated Quality Control and Defect Detection in Multi-stage Plastic Extrusion Process

- Non-profiling-based Correlation Optimization Deep Learning Analysis

- Deep Learning for Network Anomalies Detection

- FPGA based Adaptive Hardware Acceleration for Multiple Deep Learning Tasks