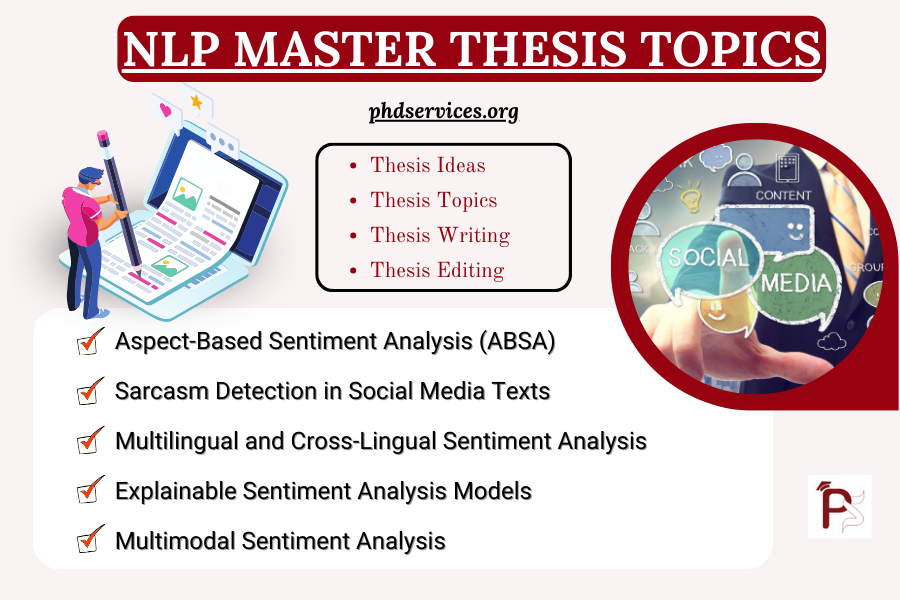

Natural Language Processing (NLP) is widely used for sentiment analysis, sarcasm detection, bias identification, and many other purposes. Below we recommend several compelling and practical research topics in NLP:

- Aspect-Based Sentiment Analysis (ABSA)

- Definition:

- ABSA evaluates the sentiment expressed toward specific aspects of an entity, such as “service” and “food” in restaurant feedback.

- Research Queries:

- How can deep learning models enhance aspect extraction and polarity categorization?

- How can pre-trained models like BERT be modified for ABSA?

- Techniques:

Aspect Extraction

- BiLSTM-CRF:

- It is a sequence tagging model that detects aspect terms.
- It combines a bidirectional LSTM layer with a CRF output layer.

# BiLSTM-CRF implementation (simplified); BiLSTM_CRF is assumed to be a user-defined model class

aspect_model = BiLSTM_CRF(vocab_size, embedding_dim, hidden_dim, num_tags)
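A sequence tagger such as the BiLSTM-CRF above emits one tag per token, and the aspect terms still have to be read off those tags. The following is a minimal sketch of that decoding step, assuming a BIO scheme with the hypothetical tag names `B-ASP`, `I-ASP`, and `O` (the source does not specify a tag set). `extract_aspects` is an illustrative helper, not part of any library.

```python
from typing import List, Tuple


def extract_aspects(tokens: List[str], tags: List[str]) -> List[Tuple[int, int, str]]:
    """Collect aspect spans from BIO tags as (start, end_exclusive, text)."""
    spans = []
    start = None  # index where the currently open span began, if any
    for i, tag in enumerate(tags):
        if tag == "B-ASP":
            # A new span begins; close any span that is still open.
            if start is not None:
                spans.append((start, i, " ".join(tokens[start:i])))
            start = i
        elif tag == "I-ASP" and start is not None:
            # Continuation of the open span.
            continue
        else:
            # "O", or an I-ASP with no preceding B-ASP (ignored here).
            if start is not None:
                spans.append((start, i, " ".join(tokens[start:i])))
            start = None
    if start is not None:
        spans.append((start, len(tokens), " ".join(tokens[start:])))
    return spans


tokens = ["The", "pasta", "was", "great", "but", "the", "wait", "staff", "was", "slow"]
tags = ["O", "B-ASP", "O", "O", "O", "O", "B-ASP", "I-ASP", "O", "O"]
aspects = extract_aspects(tokens, tags)
print(aspects)
```

Each extracted span can then be passed, together with its sentence, to a polarity classifier.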

- BERT-CRF:

- BERT-CRF is a BERT model fine-tuned specifically for aspect extraction.

- It integrates BERT embeddings with a CRF output layer.

# BERT-CRF implementation using Transformers

from transformers import BertModel

bert_model = BertModel.from_pretrained('bert-base-uncased')

Sentiment Classification:

- Attention-based LSTM:

It applies an attention mechanism over the BiLSTM hidden states for sentiment polarity classification.

# Attention layer

class Attention(nn.Module):
    def forward(self, x):
        ...  # body omitted in the original
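To make the attention step concrete, here is a small dependency-free sketch of dot-product attention pooling over a sequence of hidden states. It assumes the BiLSTM outputs are already available as plain lists of floats and that `query` stands in for a learned vector. `attention_pool` and the toy values are illustrative, not the original implementation.

```python
import math
from typing import List, Tuple


def attention_pool(hidden_states: List[List[float]],
                   query: List[float]) -> Tuple[List[float], List[float]]:
    """Return (context_vector, attention_weights) for one sequence."""
    # Score each time step by its dot product with the query vector.
    scores = [sum(h_i * q_i for h_i, q_i in zip(h, query)) for h in hidden_states]
    # Numerically stable softmax over time steps.
    max_score = max(scores)
    exp_scores = [math.exp(s - max_score) for s in scores]
    total = sum(exp_scores)
    weights = [e / total for e in exp_scores]
    # Context vector: attention-weighted sum of the hidden states.
    dim = len(hidden_states[0])
    context = [sum(w * h[d] for w, h in zip(weights, hidden_states))
               for d in range(dim)]
    return context, weights


hidden_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy values, 3 time steps
query = [1.0, 1.0]  # assumed learned parameter
context, weights = attention_pool(hidden_states, query)
print(weights)
```

The context vector would then feed a classification layer, and the weights indicate which time steps influenced it most.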

- BERT for Polarity Classification:

BERT is fine-tuned here for polarity classification.

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

polarity_model = BertForSequenceClassification.from_pretrained('bert-base-uncased')

- Sarcasm Detection in Social Media Texts

- Definition: Sarcasm detection identifies mocking comments that convey the opposite sentiment of their literal interpretation.

- Research Queries:

- How can contextual embeddings like BERT enhance sarcasm detection?

- What role does external knowledge play in interpreting sarcasm and humor?

- Techniques:

Deep Learning

- BiLSTM with Attention:

It uses a bidirectional LSTM with an attention mechanism for text representation.

class BiLSTM_Attention(nn.Module):
    def forward(self, x):
        ...  # body omitted in the original

- BERT-based Classifier:

It uses fine-tuned BERT models to classify text as sarcastic or not.

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

sarcasm_model = BertForSequenceClassification.from_pretrained('bert-base-uncased')

External Knowledge Integration:

- Knowledge-Infused Models:

This approach incorporates sarcasm-relevant knowledge from knowledge bases such as ConceptNet.

# Infusing ConceptNet into embeddings (get_concept_embeddings and concatenate are placeholder helpers)

concept_embeddings = get_concept_embeddings(text, conceptnet)

sarcasm_features = concatenate([text_embeddings, concept_embeddings])

- Multilingual and Cross-Lingual Sentiment Analysis

- Definition: Build sentiment analysis frameworks that work across diverse languages, including low-resource languages.

- Research Queries:

- How effective are multilingual embeddings for cross-lingual sentiment analysis?

- What data augmentation strategies can improve sentiment analysis in low-resource languages?

- Techniques:

Pre-trained Multilingual Models:

- XLM-R (Cross-Lingual RoBERTa):

It provides cross-lingual embeddings trained on many languages.

tokenizer = XLMRobertaTokenizer.from_pretrained('xlm-roberta-base')

xlm_model = XLMRobertaForSequenceClassification.from_pretrained('xlm-roberta-base')

- mBERT (Multilingual BERT):

It is a BERT model pre-trained on 104 languages.

tokenizer = BertTokenizer.from_pretrained('bert-base-multilingual-cased')

bert_model = BertForSequenceClassification.from_pretrained('bert-base-multilingual-cased')

Data Augmentation:

- Back-Translation:

Back-translation translates text into another language and back again to produce paraphrases.

def back_translate(text, source_lang, target_lang):
    ...  # body omitted in the original
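The round trip can be sketched without committing to a particular translation system. The version below assumes a `translate(text, src, tgt)` callable is supplied by the caller; that extra parameter and the `toy_translate` lookup table are hypothetical stand-ins for a real machine translation model or service.

```python
from typing import Callable

# Assumed signature of the translator: (text, source_lang, target_lang) -> text
Translator = Callable[[str, str, str], str]


def back_translate(text: str, source_lang: str, target_lang: str,
                   translate: Translator) -> str:
    """Translate to a pivot language and back to obtain a paraphrase."""
    pivot = translate(text, source_lang, target_lang)
    return translate(pivot, target_lang, source_lang)


# Toy lookup table standing in for a real MT system (illustration only).
TOY_TABLE = {
    ("en", "de"): {"the food was great": "das essen war toll"},
    ("de", "en"): {"das essen war toll": "the meal was great"},
}


def toy_translate(text: str, src: str, tgt: str) -> str:
    # Unknown sentences pass through unchanged.
    return TOY_TABLE.get((src, tgt), {}).get(text, text)


paraphrase = back_translate("the food was great", "en", "de", toy_translate)
print(paraphrase)
```

The paraphrase keeps the original sentiment label, so each round trip can add one more labelled training example.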

- Mixup:

Mixup creates new training examples by interpolating pairs of samples and their labels.

def mixup(x1, x2, y1, y2, alpha=0.2):
    ...  # body omitted in the original
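A minimal sketch of the interpolation follows, assuming the inputs are already numeric vectors (for text, typically embeddings rather than raw tokens) and the labels are one-hot or soft label vectors. The mixing weight is drawn from a Beta(alpha, alpha) distribution as in the original mixup formulation; the optional `lam` argument is an addition made here only so the example is reproducible.

```python
import random
from typing import List, Optional, Tuple


def mixup(x1: List[float], x2: List[float],
          y1: List[float], y2: List[float],
          alpha: float = 0.2,
          lam: Optional[float] = None) -> Tuple[List[float], List[float], float]:
    """Interpolate two samples and their label vectors with weight lam."""
    if lam is None:
        # Sample the mixing weight from Beta(alpha, alpha).
        lam = random.betavariate(alpha, alpha)
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x1, x2)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y1, y2)]
    return x, y, lam


# Two toy samples with one-hot labels; lam is fixed for a deterministic demo.
x_mix, y_mix, lam = mixup([1.0, 0.0], [0.0, 1.0],
                          [1.0, 0.0], [0.0, 1.0], lam=0.75)
print(x_mix, y_mix)
```

With a small `alpha` such as 0.2, most sampled weights fall close to 0 or 1, so the mixed example stays near one of the two originals.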

- Explainable Sentiment Analysis Models

- Definition: Design sentiment analysis models that provide understandable explanations for their predictions.

- Research Queries:

- How effective are attention mechanisms and LIME for sentiment model interpretability?

- Can interpretable models detect and reduce bias in sentiment analysis?

- Techniques:

Attention Mechanisms

- Self-Attention:

Self-attention highlights the words that contribute most to the sentiment.

class SelfAttention(nn.Module):
    def forward(self, x):
        ...  # body omitted in the original

- Hierarchical Attention Network (HAN):

HAN applies attention at both the word level and the sentence level, reflecting the hierarchical structure of documents.

Interpretability Libraries

- LIME (Local Interpretable Model-Agnostic Explanations):

For black-box models, it offers local explanations.

from lime import lime_text

explainer = lime_text.LimeTextExplainer()

# model.predict_proba must accept a list of raw strings and return class probabilities

explanation = explainer.explain_instance(text, model.predict_proba)
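The idea behind perturbation-based explanations can be illustrated with a much simpler technique than LIME: remove one word at a time and record how the predicted probability changes. The sketch below is leave-one-out occlusion, not LIME itself (LIME samples many perturbations and fits a local linear model). `toy_predict_positive` is a hypothetical lexicon-based stand-in for a real classifier.

```python
import math
from typing import Callable, List, Tuple


def leave_one_out_importance(text: str,
                             predict_positive: Callable[[str], float]
                             ) -> List[Tuple[str, float]]:
    """Score each word by the drop in P(positive) when it is removed."""
    words = text.split()
    base = predict_positive(text)
    importances = []
    for i, word in enumerate(words):
        reduced = " ".join(words[:i] + words[i + 1:])
        importances.append((word, base - predict_positive(reduced)))
    return importances


# Toy black-box model: logistic function of a lexicon score (illustration only).
POSITIVE = {"great", "tasty"}
NEGATIVE = {"slow", "bland"}


def toy_predict_positive(text: str) -> float:
    words = text.lower().split()
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    return 1.0 / (1.0 + math.exp(-score))


importance = dict(leave_one_out_importance("great food slow service",
                                           toy_predict_positive))
print(importance)
```

Words with positive scores push the prediction toward the positive class, words with negative scores push it away, and words with a score of zero had no effect on this model.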

- SHAP (SHapley Additive exPlanations):

It provides model-agnostic interpretability based on Shapley values.

import shap

explainer = shap.KernelExplainer(model.predict_proba, data)

shap_values = explainer.shap_values(instance)
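For intuition about what a Shapley value is, the quantity can be computed exactly on a tiny example by averaging each feature's marginal contribution over all orderings. This is a brute-force sketch, not the `shap` library's algorithm (`KernelExplainer` approximates these values by sampling). `toy_value` is a hypothetical scoring function with a negation interaction.

```python
from itertools import permutations
from math import factorial
from typing import Callable, Dict, FrozenSet, List


def exact_shapley(features: List[str],
                  value_fn: Callable[[FrozenSet[str]], float]) -> Dict[str, float]:
    """Average marginal contribution of each feature over all orderings."""
    totals = {f: 0.0 for f in features}
    for order in permutations(features):
        present: FrozenSet[str] = frozenset()
        for f in order:
            with_f = present | {f}
            totals[f] += value_fn(with_f) - value_fn(present)
            present = with_f
    n_orderings = factorial(len(features))
    return {f: total / n_orderings for f, total in totals.items()}


def toy_value(present_words: FrozenSet[str]) -> float:
    """Toy sentiment score when only `present_words` are visible to the model."""
    score = 0.0
    if "great" in present_words:
        score += 2.0
    if "not" in present_words:
        score -= 0.5
    if "not" in present_words and "great" in present_words:
        score -= 3.0  # negation flips the positive word
    return score


shapley = exact_shapley(["not", "great", "food"], toy_value)
print(shapley)
```

The values sum to the difference between the score with all words present and the score with none, which is the additivity property that SHAP relies on. Exact enumeration grows factorially with the number of features, which is why practical tools approximate.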

- Multimodal Sentiment Analysis

- Definition:

It combines text, audio, and images to evaluate sentiment.

- Research Queries:

- How can multimodal transformers improve sentiment analysis across text, images, and audio?

- What strategies can effectively handle modality-specific noise?

- Techniques:

Multimodal Fusion Models:

- Multimodal Transformers (ViLT, VisualBERT):

They integrate text and image data using transformer architectures.

# VisualBERT reuses the BERT tokenizer; visual features come from a separate image feature extractor

from transformers import BertTokenizer, VisualBertModel

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

vbert_model = VisualBertModel.from_pretrained('uclanlp/visualbert-vqa-coco-pre')

- MISA (Modality-Invariant and Modality-Specific Representations):

MISA learns both modality-invariant and modality-specific representations for each modality.

class MISA(nn.Module):
    def forward(self, text, audio, video):
        ...  # body omitted in the original

Feature Extraction:

- Text Features:

Use models such as GloVe or BERT to derive text embeddings.

text_embeddings = BertModel.from_pretrained('bert-base-uncased')(input_ids).last_hidden_state

- Audio Features:

Extract audio features such as MFCCs or embeddings from pre-trained audio models.

mfcc_features = librosa.feature.mfcc(y=audio, sr=sr, n_mfcc=13)

- Image Features:

Apply CNNs such as VGG or ResNet to derive image features.

# Newer torchvision versions use the weights= argument instead of pretrained=True

image_features = resnet50(pretrained=True)(images)

- Bias Detection and Mitigation in Sentiment Analysis

- Definition:

Bias in NLP models used for sentiment analysis should be identified and reduced.

- Research Queries:

- What kinds of bias are common in current sentiment analysis models?

- How can fairness-aware training techniques reduce bias without significantly degrading performance?

- Techniques:

Bias Detection:

- Word Embedding Association Test (WEAT):

WEAT tests whether word embeddings encode biased associations.

def weat_test(embeddings, target1, target2, attribute1, attribute2):
    ...  # body omitted in the original
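As a rough illustration, the WEAT effect size compares how strongly two sets of target words associate with two sets of attribute words, using cosine similarity. The sketch below assumes `embeddings` is a plain dictionary from word to vector and uses the sample standard deviation (some implementations use the population form). The two-dimensional `toy_embeddings` are invented for the demo and are not real word vectors.

```python
import math
from statistics import mean, stdev
from typing import Dict, List


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def association(word, attribute1, attribute2, embeddings) -> float:
    """Mean similarity to attribute1 minus mean similarity to attribute2."""
    sim_a = [cosine(embeddings[word], embeddings[a]) for a in attribute1]
    sim_b = [cosine(embeddings[word], embeddings[b]) for b in attribute2]
    return mean(sim_a) - mean(sim_b)


def weat_test(embeddings: Dict[str, List[float]],
              target1, target2, attribute1, attribute2) -> float:
    """Return the WEAT effect size for two target and two attribute sets."""
    s1 = [association(w, attribute1, attribute2, embeddings) for w in target1]
    s2 = [association(w, attribute1, attribute2, embeddings) for w in target2]
    return (mean(s1) - mean(s2)) / stdev(s1 + s2)


# Invented 2-D vectors in which career words lie close to male terms.
toy_embeddings = {
    "he": [1.0, 0.1], "him": [0.9, 0.2],
    "she": [0.1, 1.0], "her": [0.2, 0.9],
    "career": [1.0, 0.0], "salary": [0.9, 0.1],
    "home": [0.0, 1.0], "family": [0.1, 0.9],
}
effect = weat_test(toy_embeddings,
                   ["career", "salary"], ["home", "family"],
                   ["he", "him"], ["she", "her"])
print(effect)
```

An effect size near zero suggests no measured association, while a large positive or negative value indicates that one target set sits closer to one attribute set. The full test also reports a permutation-based p-value, which is omitted here.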

- Gender Bias Evaluation Dataset (GBET):

It assesses sentiment predictions across gender-related conditions.

Bias Mitigation Techniques:

- Counterfactual Data Augmentation:

It swaps gender-specific or biased terms to create balanced data.

def augment_with_counterfactuals(text):
    ...  # body omitted in the original
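One simple way to realise this is a dictionary-based word swap. The sketch below assumes a small hand-written table of unambiguous gendered pairs; the `SWAP` table is illustrative and deliberately leaves out ambiguous forms such as "her", which can map to either "him" or "his" and needs part-of-speech information to swap correctly.

```python
import re

# Illustrative, unambiguous pairs only; a real list would be much longer.
PAIRS = [("he", "she"), ("man", "woman"), ("men", "women"),
         ("father", "mother"), ("son", "daughter"),
         ("husband", "wife"), ("boy", "girl")]
SWAP = {}
for a, b in PAIRS:
    SWAP[a] = b
    SWAP[b] = a


def augment_with_counterfactuals(text: str) -> str:
    """Return a copy of `text` with gendered terms swapped."""
    def swap(match):
        word = match.group(0)
        replacement = SWAP.get(word.lower())
        if replacement is None:
            return word  # not a gendered term in the table
        # Preserve the capitalisation of the first letter.
        return replacement.capitalize() if word[0].isupper() else replacement
    return re.sub(r"[A-Za-z]+", swap, text)


original = "He thanked the waiter, and she left a tip."
counterfactual = augment_with_counterfactuals(original)
print(counterfactual)
```

Training on both the original and the counterfactual sentence with the same sentiment label encourages the model to ignore the swapped terms.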

- Adversarial Debiasing:

It uses adversarial training to remove bias signals from embeddings.

class AdversarialDebiasing(nn.Module):
    def forward(self, x):
        ...  # body omitted in the original

How do I choose a topic for a master’s thesis about NLP and deep learning in a smart way?

When selecting a topic in NLP (Natural Language Processing) and deep learning, start by clearly defining your own interests and objectives. Some important steps for choosing a suitable topic are given below:

- Evaluate Your Interests and Goals

- Personal Interest:

- Choose the NLP area that you are most curious about, such as machine translation, sentiment analysis, or question answering.

- Educational Objectives:

- Academia: Look for theoretical or novel topics for your project.

- Industry: Select practical, application-oriented topics.

- Identify Research Gaps

- Recent Surveys/Reviews:

- Read current survey papers to identify emerging gaps in the field.

- Example: A Survey on Recent Advances in Natural Language Processing with Deep Learning.

- Conference Papers:

- Search for recent papers from major conferences such as NeurIPS, ACL, EMNLP, and NAACL.

- Align with Available Resources

- Datasets:

- Make sure that suitable datasets are available for your topic.

- Computing Power:

- Make sure you have access to the computing resources you need, such as GPUs and cloud services.

- Advisor’s Expertise:

- The selected topic should align with your mentor’s interests and skills.

- Assess Feasibility and Impact

- Time Limit:

- Verify that the topic can realistically be completed within your thesis timeline.

- Impact:

- Prefer topics with significant academic contributions or potential real-world applications.

Potential Thesis Topics in NLP and Deep Learning

Some feasible and promising topics in NLP and deep learning are listed here:

- Multimodal Sentiment Analysis

- Explanation:

Identify the sentiment of social media posts by combining images, audio, and text.

- Potential Research Gap:

Modality fusion strategies and noise handling for multimodal data need improvement.

- Applicability:

- Datasets: Multimodal datasets such as MOSEAS and MOSEI.

- Models: Transformers such as ViLT or VisualBERT.

- Implications:

In social media analytics, it can help in evaluating consumer reviews.

- Bias Detection and Mitigation in Language Models

- Explanation:

This topic detects and reduces biases in pre-trained models such as T5 and GPT-4.

- Potential Research Gap:

Domain-specific bias identification techniques are needed.

- Applicability:

- Datasets: Custom annotated datasets, StereoSet, and WinoBias.

- Models: Adversarial models, T5, and GPT.

- Implications:

It helps ensure fairness in applications such as healthcare services and recruitment.

- Question Answering over Knowledge Graphs

- Explanation:

Design QA (Question Answering) systems that use knowledge graphs such as Wikidata.

- Potential Research Gap:

Graph traversal and reasoning algorithms should be improved.

- Applicability:

- Datasets: ComplexWebQuestions and WebQuestionsSP.

- Models: KG-BERT and GNNs (Graph Neural Networks).

- Implications:

It is highly applicable to customer support and learning systems.

- Domain Adaptation in Natural Language Generation

- Explanation:

Adapt language generation models to new domains such as legal or healthcare text.

- Potential Research Gap:

Domain transfer techniques and few-shot learning methods need to be improved.

- Applicability:

- Datasets: PubMed articles and the E2E NLG Challenge dataset.

- Models: T5 and GPT-4, combined with transfer learning.

- Implications:

This research is well suited to customer response systems and automated report generation.

- Neural Text Summarization with Factual Consistency

- Explanation:

Create summarization frameworks that ensure factual consistency.

- Potential Research Gap:

The consistency and capability of summarization models need further development.

- Applicability:

- Datasets: PubMed, CNN/Daily Mail, and XSum.

- Models: PEGASUS, GPT-4, and BART.

- Implications:

It can be used for report generation and news summarization.

- Robustness of Language Models Against Adversarial Attacks

- Explanation:

This research improves the resilience of pre-trained language models against adversarial attacks.

- Potential Research Gap:

Data augmentation and adversarial training need to be improved.

- Applicability:

- Datasets: AG News, GLUE tasks, and IMDB.

- Models: RoBERTa, T5, and BERT.

- Implications:

It helps ensure model security in areas such as customer support and chatbots.

- Explainable NLP Models for Legal Document Analysis

- Explanation:

Develop interpretable NLP (Natural Language Processing) models to classify and summarize legal documents.

- Potential Research Gap:

For legal NLP models, it is crucial to design efficient interpretability techniques.

- Applicability:

- Datasets: LexNLP and Legal Case Reports.

- Models: Attention-based networks, RoBERTa, and BERT.

- Implications:

It is highly applicable to legal research and contract analysis.

Final Thoughts

- Feedback Mechanism:

- To refine your topic, share your preferred topics with your guides and mentors and ask for feedback.

- Iterative Refinement:

- Begin with a broad topic and narrow it based on your literature review and preliminary experiments.

Example of the Topic Selection Process

- Step 1: Identify your interests in specific areas and read survey papers.

- Step 2: List the relevant conferences and read their recent papers.

- Step 3: Select 3-4 promising topics that fit your available resources.

- Step 4: Share your ideas with your mentors and confirm the topic with their guidance.

- Step 5: Refine the research questions and methodology.

NLP MASTER THESIS IDEAS

For a novice research scholar, choosing an NLP master’s thesis idea and topic that is both captivating and suitable can be quite overwhelming. At phdservices.org, we understand this dilemma and offer guidance through our technical team.

- Practice-Based Learning and Improvement: Improving Morbidity and Mortality Review Using Natural Language Processing

- A deep learning and natural language processing-based system for automatic identification and surveillance of high-risk patients undergoing upper endoscopy: A multicenter study

- Detecting a citizens’ activity profile of an urban territory through natural language processing of social media data

- Revenue and Cost Analysis of a System Utilizing Natural Language Processing and a Nurse Coordinator for Radiology Follow-up Recommendations

- Successful implementation of a nurse-navigator–run program using natural language processing identifying patients with an abdominal aortic aneurysm

- Measuring the impact of simulation debriefing on the practices of interprofessional trauma teams using natural language processing

- Looking through glass: Knowledge discovery from materials science literature using natural language processing

- Classification of cervical biopsy free-text diagnoses through linear-classifier based natural language processing

- A method for assisting the accident consequence prediction and cause investigation in petrochemical industries based on natural language processing technology

- Identifying step-level complexity in procedures: Integration of natural language processing into the Complexity Index for Procedures—Step level (CIPS)

- A comprehensive survey of deep learning in the field of medical imaging and medical natural language processing: Challenges and research directions

- Improving knowledge capture and retrieval in the BIM environment: Combining case-based reasoning and natural language processing

- Machine learning based natural language processing of radiology reports in orthopaedic trauma

- A comparative assessment of action plans on antimicrobial resistance from OECD and G20 countries using natural language processing

- A framework for the automatic description of healthcare processes in natural language: Application in an aortic stenosis integrated care process

- Semi-supervised learning with natural language processing for right ventricle classification in echocardiography—a scalable approach

- A design of movie script generation based on natural language processing by optimized ensemble deep learning with heuristic algorithm

- Intelligent and robust computational prediction model for DNA N4-methylcytosine sites via natural language processing

- Natural language processing for automated surveillance of intraoperative neuromonitoring in spine surgery

- A data-driven architecture using natural language processing to improve phenotyping efficiency and accelerate genetic diagnoses of rare disorders