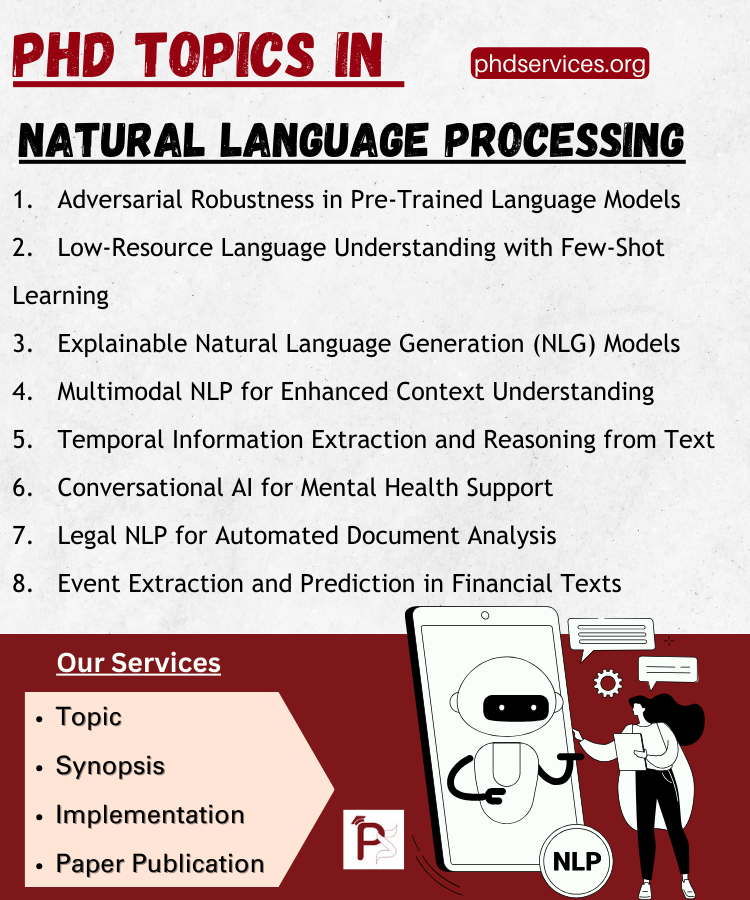

In the domain of Natural Language Processing (NLP), several topics have been advancing rapidly in recent years. Below we present a few of the most popular and advanced ones, each with a short explanation, research questions, and possible contributions:

- Adversarial Robustness in Pre-Trained Language Models

- Explanation: Investigate how adversarial attacks affect pre-trained models such as GPT-4 and T5, and focus on building defenses against them.

- Research Questions:

- What strategies can be used to defend against adversarial attacks in NLP?

- How do adversarial attacks affect the comprehension and generation capabilities of language models?

- Possible Contributions:

- Robust adversarial training techniques.

- A benchmarking framework for assessing adversarial robustness.
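As a minimal sketch of the kind of robustness probe this topic calls for, the Python code below generates typo-style character perturbations (swapping two adjacent inner characters of a word) and measures how often they flip a classifier's prediction. The function names and the keyword-based `toy_classifier` are hypothetical stand-ins; a real study would plug in the pre-trained model under test and use stronger attacks.

```python
import random
from typing import Callable, List


def swap_perturbations(text: str, n_variants: int = 5, seed: int = 0) -> List[str]:
    """Generate typo-style variants by swapping two adjacent inner characters of one word.

    Only words of four or more characters are perturbed, and the first and last
    characters of the chosen word are left untouched.
    """
    rng = random.Random(seed)  # seeded so the "attack" is reproducible
    words = text.split()
    candidates = [i for i, w in enumerate(words) if len(w) >= 4]
    variants: List[str] = []
    if not candidates:
        return variants
    for _ in range(n_variants):
        i = rng.choice(candidates)
        w = words[i]
        j = rng.randrange(1, len(w) - 2)  # swap positions j and j + 1 (both inner)
        perturbed = w[:j] + w[j + 1] + w[j] + w[j + 2:]
        variants.append(" ".join(words[:i] + [perturbed] + words[i + 1:]))
    return variants


def flip_rate(classify: Callable[[str], str], text: str, variants: List[str]) -> float:
    """Fraction of perturbed inputs whose predicted label differs from the clean one."""
    if not variants:
        return 0.0
    clean_label = classify(text)
    flips = sum(1 for v in variants if classify(v) != clean_label)
    return flips / len(variants)


def toy_classifier(text: str) -> str:
    """Hypothetical stand-in for a real model: a brittle keyword-based sentiment rule."""
    return "positive" if "excellent" in text.lower().split() else "negative"


if __name__ == "__main__":
    sentence = "The movie was excellent"
    attacks = swap_perturbations(sentence, n_variants=10)
    print(attacks[:3])
    print("flip rate:", flip_rate(toy_classifier, sentence, attacks))
```

A high flip rate on such cheap perturbations signals brittleness; the same harness could be reused to compare a model before and after adversarial training.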

- Low-Resource Language Understanding with Few-Shot Learning

- Explanation: Use few-shot learning approaches to build frameworks that understand and generate low-resource languages.

- Research Questions:

- Which few-shot learning strategies improve model performance on low-resource languages?

- How effective are cross-lingual embeddings for understanding low-resource languages?

- Possible Contributions:

- Few-shot learning models for multilingual systems.

- Novel annotated datasets for low-resource languages.

- Explainable Natural Language Generation (NLG) Models

- Explanation: Develop interpretable and explainable NLG systems, particularly for summarization, dialogue, and creative writing.

- Research Questions:

- What balance can be struck between explainability and NLG performance?

- How can interpretability techniques improve the transparency of NLG frameworks?

- Possible Contributions:

- An explanation framework for NLG systems.

- Benchmark datasets for evaluating explainable NLG.

- Bias Mitigation and Fairness in NLP Models

- Explanation: Identify and reduce bias in NLP systems to ensure fairness in downstream applications.

- Research Questions:

- What strategies can reduce bias while maintaining performance?

- How can bias be detected and measured in pre-trained language models?

- Possible Contributions:

- Domain-specific bias detection algorithms.

- Fairness-aware model training approaches.
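One common way to quantify bias in embeddings is a WEAT-style association test, which compares how strongly two sets of target words associate with two sets of attribute words. The sketch below uses plain Python vectors and tiny hand-made 2-D "embeddings" (hypothetical, deliberately constructed to be biased) in place of vectors from a real pre-trained model; it uses the sample standard deviation in the denominator.

```python
import math
from statistics import mean, stdev
from typing import Sequence

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def association(w: Vector, A: Sequence[Vector], B: Sequence[Vector]) -> float:
    """s(w, A, B): mean similarity to attribute set A minus mean similarity to set B."""
    return mean(cosine(w, a) for a in A) - mean(cosine(w, b) for b in B)


def weat_effect_size(X: Sequence[Vector], Y: Sequence[Vector],
                     A: Sequence[Vector], B: Sequence[Vector]) -> float:
    """Difference in mean association of target sets X and Y, divided by the
    sample standard deviation of the associations over X and Y combined."""
    s_x = [association(x, A, B) for x in X]
    s_y = [association(y, A, B) for y in Y]
    return (mean(s_x) - mean(s_y)) / stdev(s_x + s_y)


if __name__ == "__main__":
    # Hypothetical 2-D vectors, not taken from any real embedding model.
    A = [(1.0, 0.0), (0.9, 0.1)]  # e.g. male attribute words
    B = [(0.0, 1.0), (0.1, 0.9)]  # e.g. female attribute words
    X = [(0.8, 0.2), (0.7, 0.3)]  # e.g. career-related target words
    Y = [(0.2, 0.8), (0.3, 0.7)]  # e.g. family-related target words
    print("effect size:", round(weat_effect_size(X, Y, A, B), 3))
```

An effect size near zero suggests no measurable association; a positive value means X sits closer to A (and Y closer to B) than the reverse, and swapping X and Y flips the sign.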

- Knowledge Graph Construction and Reasoning for Question Answering (QA)

- Explanation: Develop techniques for automatic knowledge graph (KG) construction and for reasoning over KGs in QA tasks.

- Research Questions:

- What reasoning techniques are effective for answering complex multi-hop questions?

- How can KGs be constructed automatically from unstructured text?

- Possible Contributions:

- Novel KG construction and reasoning methods.

- Domain-specific KGs and QA datasets.
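Multi-hop QA over a knowledge graph can be illustrated by following a chain of relations through (head, relation, tail) triples. In the sketch below the relation path is supplied by hand; in a real system it would have to be predicted from the question (for example, by a semantic parser). The tiny graph and the relation names are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

Triple = Tuple[str, str, str]  # (head entity, relation, tail entity)
Index = Dict[Tuple[str, str], Set[str]]


def build_index(triples: Iterable[Triple]) -> Index:
    """Map each (head, relation) pair to the set of tail entities it points to."""
    index: Index = defaultdict(set)
    for head, relation, tail in triples:
        index[(head, relation)].add(tail)
    return index


def answer_multi_hop(index: Index, start: str, path: List[str]) -> Set[str]:
    """Follow a chain of relations from a start entity, one hop per relation.

    Every entity in the current frontier is expanded at each hop, so one-to-many
    relations are handled naturally. Returns an empty set if the chain breaks.
    """
    frontier = {start}
    for relation in path:
        frontier = {tail for entity in frontier for tail in index.get((entity, relation), set())}
        if not frontier:
            break
    return frontier


if __name__ == "__main__":
    kg = build_index([
        ("Marie Curie", "born_in", "Warsaw"),
        ("Warsaw", "located_in", "Poland"),
        ("Pierre Curie", "born_in", "Paris"),
        ("Paris", "located_in", "France"),
        ("Marie Curie", "spouse", "Pierre Curie"),
    ])
    # "In which country was Marie Curie's spouse born?" needs three hops.
    print(answer_multi_hop(kg, "Marie Curie", ["spouse", "born_in", "located_in"]))
```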

- Multimodal NLP for Enhanced Context Understanding

- Explanation: Examine how combining images, audio, and text can improve context understanding in NLP tasks.

- Research Questions:

- What challenges arise in aligning different modalities for specific NLP tasks?

- How can multimodal transformers effectively fuse image, text, and audio data?

- Possible Contributions:

- A multimodal transformer architecture for context understanding.

- Multimodal benchmark datasets for evaluation.

- Temporal Information Extraction and Reasoning from Text

- Explanation: Extract and reason about temporal information in text, mainly for timeline construction and event forecasting.

- Research Questions:

- What reasoning techniques can infer event sequences and timelines from text?

- How can temporal expressions and events be accurately extracted and normalized?

- Possible Contributions:

- New temporal reasoning methods and benchmark datasets.

- A temporal information extraction framework.
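A rule-based extractor is a typical baseline for the question of extracting and normalizing temporal expressions. The sketch below is a simplification under stated assumptions: it recognizes only three absolute date formats and normalizes them to ISO 8601. Relative expressions such as "next Tuesday" would additionally need a reference date and are out of scope here.

```python
import re
from datetime import date
from typing import List, Tuple

MONTHS = {
    name: number
    for number, name in enumerate(
        ["january", "february", "march", "april", "may", "june", "july",
         "august", "september", "october", "november", "december"],
        start=1,
    )
}
MONTH_RE = "|".join(MONTHS)

# Each pattern is paired with the order in which its capture groups appear.
PATTERNS = [
    # e.g. "March 3, 2021"
    (re.compile(rf"\b({MONTH_RE})\s+(\d{{1,2}}),\s*(\d{{4}})\b", re.IGNORECASE), ("month", "day", "year")),
    # e.g. "3 March 2021"
    (re.compile(rf"\b(\d{{1,2}})\s+({MONTH_RE})\s+(\d{{4}})\b", re.IGNORECASE), ("day", "month", "year")),
    # e.g. "2021-03-03"
    (re.compile(r"\b(\d{4})-(\d{2})-(\d{2})\b"), ("year", "month", "day")),
]


def extract_dates(text: str) -> List[Tuple[str, str]]:
    """Return (surface string, ISO 8601 date) pairs in order of appearance."""
    found = []
    for pattern, order in PATTERNS:
        for match in pattern.finditer(text):
            parts = dict(zip(order, match.groups()))
            month = parts["month"]
            month_number = int(month) if month.isdigit() else MONTHS[month.lower()]
            try:
                normalized = date(int(parts["year"]), month_number, int(parts["day"]))
            except ValueError:
                continue  # skip impossible dates such as February 30
            found.append((match.start(), match.group(0), normalized.isoformat()))
    found.sort()
    return [(surface, iso) for _, surface, iso in found]


if __name__ == "__main__":
    sample = "The merger was announced on March 3, 2021 and closed on 2021-06-30; a review is due 15 July 2022."
    for surface, iso in extract_dates(sample):
        print(f"{surface!r} -> {iso}")
```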

- Conversational AI for Mental Health Support

- Explanation: Build empathetic dialogue models for mental health monitoring and support.

- Research Questions:

- What ethical issues arise in deploying mental health chatbots?

- How can conversational AI detect and respond to mental health signals in dialogue?

- Possible Contributions:

- Benchmark datasets for mental health dialogue models.

- A framework for empathetic, supportive conversational agents.

- Legal NLP for Automated Document Analysis

- Explanation: Investigate NLP approaches for the automated analysis of legal contracts and documents.

- Research Questions:

- What multi-label classification approaches can improve legal document classification?

- How can entity recognition and relation extraction be adapted to legal terminology?

- Possible Contributions:

- A model for legal document classification and entity recognition.

- Open-source datasets for legal NLP research.

- Event Extraction and Prediction in Financial Texts

- Explanation: Extract and predict market-moving events from financial news and documents.

- Research Questions:

- What predictive frameworks can capture market trends based on extracted events?

- How can market-moving events be accurately extracted and classified from financial texts?

- Possible Contributions:

- Event extraction and prediction methods.

- Annotated datasets for event-driven market forecasting.

- Cross-Domain Transfer Learning in NLP

- Explanation: Examine cross-domain transfer learning as a way to improve the generalization of NLP frameworks.

- Research Questions:

- What multi-task learning strategies can improve transfer across domains and tasks?

- How can domain adaptation approaches improve NLP performance in new domains?

- Possible Contributions:

- A transfer learning model for cross-domain adaptation.

- Novel multi-domain and multi-task datasets for evaluation.

- NLP Models for Long-Form Document Understanding

- Explanation: Build NLP frameworks capable of understanding long-form documents such as research papers and books.

- Research Questions:

- What comprehension and summarization approaches are effective for long-form texts?

- How can transformers and graph networks handle long-context dependencies?

- Possible Contributions:

- Benchmark datasets for long-form document understanding.

- A long-context transformer architecture for document understanding.
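Before reaching for specialized long-context architectures, a common baseline for documents that exceed a model's context window is to split them into overlapping windows and process each window separately. This sketch assumes whitespace tokens instead of a real subword tokenizer, and the window and stride values are arbitrary examples.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def sliding_windows(tokens: Sequence[T], window: int, stride: int) -> List[Sequence[T]]:
    """Split a token sequence into overlapping windows.

    Consecutive windows share (window - stride) tokens, which gives text near a
    window boundary some context on both sides. The final window may be shorter.
    """
    if window <= 0 or not 0 < stride <= window:
        raise ValueError("require window > 0 and 0 < stride <= window")
    if not tokens:
        return []
    chunks = []
    start = 0
    while True:
        chunks.append(tokens[start:start + window])
        if start + window >= len(tokens):
            break  # this window already reaches the end of the document
        start += stride
    return chunks


if __name__ == "__main__":
    # Whitespace tokens stand in for a real subword tokenizer (an assumption).
    document = "Long documents such as research papers and books exceed typical context windows".split()
    for chunk in sliding_windows(document, window=5, stride=3):
        print(" ".join(chunk))
```

The overlap trades extra computation for context across boundaries; per-window outputs (for example, partial summaries) still have to be merged, which is exactly where long-context transformers and graph-based approaches aim to do better.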

- Neural Methods for Story Generation and Creative Writing

- Explanation: Develop frameworks that generate coherent and creative articles or stories.

- Research Questions:

- What evaluation metrics can measure creativity and coherence in generated stories?

- How can large language models be adapted for coherent story generation?

- Possible Contributions:

- A story generation model built on pre-trained frameworks.

- New benchmark datasets and evaluation metrics.

- NLP-Based Human-Computer Interaction for Accessibility

- Explanation: Build NLP models that improve computer accessibility for users with disabilities.

- Research Questions:

- What ethical issues arise in creating NLP frameworks for accessibility?

- How can speech- and text-based models improve digital accessibility for visually or hearing-impaired users?

- Possible Contributions:

- Accessible NLP model frameworks, such as speech-to-text and text-to-text systems.

- Benchmark datasets for evaluating accessible NLP systems.

What are some interesting ideas for a research project in Natural Language Processing and/or Machine Translation?

There are numerous research project ideas in Machine Translation and Natural Language Processing, and some stand out as especially interesting and impactful. The following are a few compelling research project ideas in NLP and MT:

Research Project Ideas in Natural Language Processing (NLP) and Machine Translation (MT)

- Low-Resource Language Translation with Cross-Lingual Transfer Learning

- Explanation: Build cross-lingual frameworks that translate low-resource languages by leveraging knowledge from high-resource languages.

- Research Area:

- Zero-shot translation for unseen language pairs.

- Effective use of multilingual embeddings for knowledge transfer.

- Problems:

- Handling cultural context and idiomatic expressions.

- Data scarcity and efficient cross-lingual training.

- Possible Contributions:

- Transfer learning strategies for low-resource language pairs.

- An evaluation framework for zero-shot translation models.

- Multimodal Machine Translation (MMT)

- Explanation: Improve translation quality by incorporating image and video context into text translation systems.

- Research Area:

- Build multimodal transformers that align text with visual context.

- Explore modality-specific attention mechanisms.

- Problems:

- Handling noisy or missing visual information.

- Accurately aligning textual and visual modalities.

- Possible Contributions:

- Novel multimodal translation datasets.

- A transformer-based architecture for multimodal translation.

- Explainable Neural Machine Translation

- Explanation: Develop NMT frameworks that provide understandable explanations for their translation decisions.

- Research Area:

- Design attention mechanisms or latent representations that highlight translation decisions.

- Develop interpretability metrics specific to translation tasks.

- Problems:

- Balancing interpretability against translation performance.

- Generating meaningful explanations for complex translation cases.

- Possible Contributions:

- Benchmark datasets with annotated explanations.

- A framework for explainable neural translation systems.

- Domain Adaptation in Machine Translation

- Explanation: Adapt MT systems to specific domains such as legal, medical, or technical texts.

- Research Area:

- Use pre-trained frameworks and domain-specific fine-tuning approaches.

- Apply active learning or self-training strategies.

- Problems:

- Ensuring translation consistency across domains.

- Handling vocabulary mismatches and domain shift.

- Possible Contributions:

- A domain adaptation model for MT frameworks.

- Novel annotated datasets for domain-specific MT.

- Data Augmentation for Robust Neural Machine Translation

- Explanation: Develop data augmentation approaches that improve the robustness of translation frameworks.

- Research Area:

- Use back-translation and paraphrase generation for data augmentation.

- Use adversarial training to improve model robustness.

- Problems:

- Measuring robustness gains effectively.

- Ensuring that augmented data aligns with new data distributions.

- Possible Contributions:

- New data augmentation strategies for MT.

- Metrics for evaluating adversarial robustness.
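Back-translation creates synthetic parallel data by translating target-language monolingual text back into the source language with a reverse model, then pairing each synthetic source with the genuine target sentence. The sketch below shows only this data flow: the German-to-English task direction and the word-by-word `EN_TO_DE` lookup are hypothetical placeholders for a trained reverse NMT model.

```python
from typing import Callable, List, Tuple

Translator = Callable[[str], str]


def back_translate(target_sentences: List[str], reverse_model: Translator) -> List[Tuple[str, str]]:
    """Build synthetic (source, target) pairs: the reverse model produces the source side."""
    return [(reverse_model(t), t) for t in target_sentences]


def augment(parallel: List[Tuple[str, str]], target_monolingual: List[str],
            reverse_model: Translator) -> List[Tuple[str, str]]:
    """Concatenate real parallel data with back-translated synthetic pairs."""
    return parallel + back_translate(target_monolingual, reverse_model)


# Hypothetical English -> German word lookup, standing in for a trained reverse model.
EN_TO_DE = {"the": "das", "house": "haus", "is": "ist", "small": "klein", "old": "alt"}


def toy_en_to_de(sentence: str) -> str:
    """Word-by-word 'translation'; unknown words are copied through unchanged."""
    return " ".join(EN_TO_DE.get(w, w) for w in sentence.lower().split())


if __name__ == "__main__":
    real_pairs = [("das haus ist alt", "The house is old")]  # German -> English task
    monolingual_en = ["The house is small"]
    for src, tgt in augment(real_pairs, monolingual_en, toy_en_to_de):
        print(f"{src!r} -> {tgt!r}")
```

Keeping the target side genuine is the point of the design: the forward model learns to produce fluent, real target text even though its synthetic inputs may be noisy.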

- Machine Translation Evaluation Metrics with Human-Like Judgement

- Explanation: Design MT evaluation metrics that correlate well with human judgments.

- Research Area:

- Develop metrics based on semantic similarity.

- Effectively combine reference-based adequacy with contextual embeddings.

- Problems:

- Designing metrics suited to a variety of translation tasks.

- Balancing precision and recall in evaluation.

- Possible Contributions:

- Novel benchmark datasets with human judgments.

- A comprehensive MT evaluation framework.
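New metrics in this area are usually judged against n-gram overlap baselines such as BLEU. For reference, here is a simplified, single-reference, sentence-level BLEU without smoothing (clipped n-gram precision, geometric mean, brevity penalty). It is a teaching sketch; reported results should come from an established implementation such as sacreBLEU.

```python
import math
from collections import Counter
from typing import List


def ngram_counts(tokens: List[str], n: int) -> Counter:
    """Count all n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate: List[str], reference: List[str], n: int) -> float:
    """Clipped n-gram precision: a candidate n-gram counts at most as often as it occurs in the reference."""
    cand = ngram_counts(candidate, n)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    ref = ngram_counts(reference, n)
    clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
    return clipped / total


def sentence_bleu(candidate: List[str], reference: List[str], max_n: int = 4) -> float:
    """Unsmoothed single-reference BLEU: geometric mean of clipped precisions times brevity penalty."""
    precisions = [modified_precision(candidate, reference, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0  # log(0) is undefined, so unsmoothed BLEU is zero here
    log_mean = sum(math.log(p) for p in precisions) / max_n
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_mean)


if __name__ == "__main__":
    ref = "the cat is on the mat".split()
    hyp = "the cat sat on the mat".split()
    print("BLEU-4:", sentence_bleu(hyp, ref))
    print("BLEU-2:", round(sentence_bleu(hyp, ref, max_n=2), 4))
```

Because it is unsmoothed, a short hypothesis with no matching 4-gram scores exactly 0 even when it is close to the reference (for the pair above, BLEU-4 is 0 while `max_n=2` gives about 0.71), which illustrates why surface-overlap metrics can disagree with human judgments.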

- Sentiment Analysis for Cross-Lingual Social Media Monitoring

- Explanation: Build sentiment analysis frameworks that work across multiple languages for social media monitoring.

- Research Area:

- Perform multilingual sentiment analysis using cross-lingual embeddings.

- Apply domain adaptation to social media text understanding.

- Problems:

- Aligning sentiment across different cultural contexts.

- Handling informal language, tone, and code-mixed text.

- Possible Contributions:

- A multilingual sentiment analysis model.

- Annotated multilingual social media sentiment datasets.

- Neural Text Simplification for Accessibility

- Explanation: Develop systems that automatically simplify text, such as legal documents or medical texts, to make it more accessible.

- Research Area:

- Use pre-trained frameworks for text simplification and paraphrase generation.

- Focus on evaluating comprehension and readability.

- Problems:

- Evaluating model-generated simplifications effectively.

- Balancing clarity with accurate representation of information.

- Possible Contributions:

- Text simplification frameworks that produce user-friendly explanations.

- An evaluation framework for comprehension and readability.
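For the readability side of evaluation, the classic Flesch Reading Ease formula, 206.835 - 1.015 x (words per sentence) - 84.6 x (syllables per word), gives a quick surface-level score where higher means easier to read. The sketch below assumes a crude vowel-group syllable counter and punctuation-based sentence splitting, both rough heuristics; such a score says nothing about whether a simplification preserves the original meaning.

```python
import re


def count_syllables(word: str) -> int:
    """Rough English syllable estimate: count vowel groups, discount a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1  # "make" -> 1, while "table" keeps 2
    return max(count, 1)


def flesch_reading_ease(text: str) -> float:
    """Flesch Reading Ease score; higher values indicate easier text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


if __name__ == "__main__":
    original = "Comprehensive pharmacological interventions necessitate interdisciplinary consideration."
    simplified = "Good drug treatment needs input from many kinds of experts."
    print(round(flesch_reading_ease(original), 1), round(flesch_reading_ease(simplified), 1))
```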

- Question Answering (QA) for Cross-Lingual and Multilingual Contexts

- Explanation: Build QA frameworks that can understand and answer questions across multiple languages.

- Research Area:

- Use cross-lingual transfer learning for multilingual QA.

- Align multilingual QA pairs using translation frameworks.

- Problems:

- Evaluating QA performance across languages.

- Handling mixed-language queries and complex translations.

- Possible Contributions:

- Cross-lingual QA datasets with aligned pairs.

- Evaluation metrics for multilingual QA.

- Natural Language Generation (NLG) with Style Transfer and Paraphrasing

- Explanation: Build systems that paraphrase text accurately or generate text in specific styles.

- Research Area:

- Paraphrase generation with controllable attributes.

- Perform style transfer using transformers or generative adversarial networks (GANs).

- Problems:

- Handling attribute-specific control in text generation.

- Evaluating style transfer quality and fluency.

- Possible Contributions:

- Annotated datasets for evaluating style transfer.

- A model for style transfer and paraphrasing in NLG.

PhD Ideas in Natural Language Processing

We explore a wide range of concepts when developing PhD ideas in Natural Language Processing. Our team also takes responsibility for thorough proofreading and editing, so your final work is polished and error-free. As a result, you can present your research with clarity and accuracy, leaving a lasting impression on readers and evaluators.

- Syntactic and semantic information extraction from NPP procedures utilizing natural language processing integrated with rules

- Natural language processing of implantable cardioverter-defibrillator reports in hypertrophic cardiomyopathy: A paradigm for longitudinal device follow-up

- Natural language processing-enhanced extraction of SBVR business vocabularies and business rules from UML use case diagrams

- Natural language processing for web browsing analytics: Challenges, lessons learned, and opportunities

- Predicting human–pathogen protein–protein interactions using Natural Language Processing methods

- Machine learning and deep learning-based Natural Language Processing for auto-vetting the appropriateness of Lumbar Spine Magnetic Resonance Imaging Referrals

- Natural language processing of head CT reports to identify intracranial mass effect: CTIME algorithm

- An intelligent patent recommender adopting machine learning approach for natural language processing: A case study for smart machinery technology mining

- Enhancing Optimized Personalized Therapy in Clinical Decision Support System using Natural Language Processing

- A counterfactual thinking perspective of moral licensing effect in machine-driven communication: An example of natural language processing chatbot developed based on WeChat API

- Natural language processing was effective in assisting rapid title and abstract screening when updating systematic reviews

- An intelligent computational model for prediction of promoters and their strength via natural language processing

- Semantic-based padding in convolutional neural networks for improving the performance in natural language processing. A case of study in sentiment analysis

- Multi-domain clinical natural language processing with MedCAT: The Medical Concept Annotation Toolkit

- Knowledge acquisition from chemical accident databases using an ontology-based method and natural language processing

- Natural language processing-based characterization of top-down communication in smart cities for enhancing citizen alignment

- Validation of a natural language processing algorithm to identify adenomas and measure adenoma detection rates across a health system: a population-level study

- Validation of natural language processing to determine the presence and size of abdominal aortic aneurysms in a large integrated health system

- A critical analysis and explication of word sense disambiguation as approached by natural language processing

- Natural language processing and entrustable professional activity text feedback in surgery: A machine learning model of resident autonomy